AI Knowledge: Is ChatGPT smarter than you?

ChatGPT will change the world, but not how you want it to...

This is part 1 of a 10-part series on the ethical building blocks of Artificial Intelligence. In each instalment I examine the values on which the technology has been built. AI is a tool that can be used for good or evil, but it is not a neutral tool: it has its own values baked in.

Apparently ChatGPT is going to change the world. Homework is defunct; creatives, copywriters and coders will be made redundant. We have crossed a new AI threshold.

So, is that a good thing? Are we a step closer to the dawn of a glorious AI utopia or to the dark days of machine overlords?

Like all technology, ChatGPT is a tool that can be used for good or evil: making learning easier, automating tedious jobs and translating seamlessly, or creating manipulative social media bots, plagiarism and mass unemployment. But we should remember that even before you apply it to a task, it has baked-in values which it reflects. ChatGPT is not neutral.

So what values are baked into ChatGPT?

I will focus on these five, but do suggest others in the comments!

1. Your unpaid labour is up for grabs.

2. Impersonating a human is safe.

3. Being wrong is fine.

4. Some knowledge should be secret.

5. An environmental cost is acceptable.

But first, what exactly is ChatGPT?

ChatGPT is built on a Generative Pre-trained Transformer (GPT), a type of large language model trained on huge datasets of text to generate human-like text. GPTs can translate, generate text, and classify text by sentiment, e.g. ‘this email from your boss is angry’. The texts baked into GPTs are key: during pre-training, the AI learns to predict the most likely next word in a sentence, much like autocomplete. As you can imagine, training the AI on Reddit will give you a very different predicted next word than training it on the Bible.
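To make the autocomplete comparison concrete, here is a deliberately tiny sketch in Python. It is a bigram model, which simply counts which word follows which in its training text. Real GPTs use a neural network over sub-word tokens and far more context, so treat this as an illustration of the principle rather than of how ChatGPT actually works. The two training snippets and the function names are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Two tiny, invented corpora standing in for very different training sets.
forum_text = "the game was great and the game was long and the game was fun"
scripture_text = "in the beginning was the word and the word was with god"

forum_model = train_bigram(forum_text)
scripture_model = train_bigram(scripture_text)

print(predict_next(forum_model, "the"))      # game
print(predict_next(scripture_model, "the"))  # word
```

Same code, same question (“what comes after ‘the’?”), but a different answer, purely because of the text each model was trained on.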

How does it work?

The version of ChatGPT that we have all been using has a few more elements on top.

It was pre-trained on massive amounts of text from the internet, making it essentially a super-powerful autocomplete.

It used Supervised Learning. Human trainers acted out potential conversations, role-playing as both the AI and the questioner. The AI then had a go at composing its own answers based on those examples.

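Human trainers then ranked the AI’s attempts at conversations from best to worst. These rankings were used to train a “reward model”, a separate model that scores responses, which in turn was used to fine-tune ChatGPT’s answers. This approach is called Reinforcement Learning from Human Feedback.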
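The ranking step is the least intuitive part of that pipeline. OpenAI described one way of doing it for InstructGPT, ChatGPT’s predecessor, and we assume here that ChatGPT works similarly: each ranking is split into pairs of ‘this answer beat that one’, and a reward model is trained so that the winning answer in each pair gets the higher score. The Python sketch below shows only that bookkeeping and the loss; the ranking, the scores and the function names are hypothetical, and no actual model is trained.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_responses):
    """Split a best-to-worst ranking into (preferred, rejected) pairs.

    A ranking of k responses yields k * (k - 1) / 2 pairs. Because
    combinations() keeps the input order, the first item of each pair
    is always the one the human ranked higher.
    """
    return list(combinations(ranked_responses, 2))


def pairwise_loss(score_preferred, score_rejected):
    """-log(sigmoid(preferred - rejected)), written as log(1 + e^-diff).

    Small when the reward model scores the preferred response higher,
    large when it gets the order the wrong way round.
    """
    diff = score_preferred - score_rejected
    return math.log1p(math.exp(-diff))


# One trainer's ranking of four answers to the same prompt (hypothetical).
ranking = ["answer A", "answer B", "answer C", "answer D"]
pairs = ranking_to_pairs(ranking)
print(len(pairs))  # 6

# Made-up scores standing in for a reward model's output.
scores = {"answer A": 2.0, "answer B": 1.0, "answer C": 0.5, "answer D": -1.0}
total = sum(pairwise_loss(scores[p], scores[r]) for p, r in pairs)
print(f"total loss: {total:.3f}")
```

Training nudges the reward model’s scores to shrink that total, which is how a human’s sense of “better” and “worse” (and their biases) gets turned into numbers.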

Proximal Policy Optimization (PPO), a reinforcement learning algorithm, was then used to fine-tune the AI towards responses the reward model scored highly, while limiting how much each training step could change its behaviour.
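For the curious, the core idea of PPO fits in a few lines. The sketch below follows the clipped objective from the original PPO paper for a single action; the function name is ours, `epsilon=0.2` is a commonly used default rather than anything known about ChatGPT’s settings, and everything else in a real training loop (the neural network, the reward model, how advantages are estimated) is left out.

```python
def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """PPO's clipped surrogate objective for a single action (to be maximised).

    ratio:     probability of the action under the updated model divided by
               its probability under the model before the update.
    advantage: how much better than expected the action turned out to be.
    epsilon:   how far the ratio may move before the gain is capped.
    """
    # Keep the ratio inside [1 - epsilon, 1 + epsilon].
    clipped_ratio = max(1 - epsilon, min(ratio, 1 + epsilon))
    # Take the more pessimistic of the unclipped and clipped estimates,
    # so a single update gains nothing from moving the model too far.
    return min(ratio * advantage, clipped_ratio * advantage)


# A good action (positive advantage) whose probability jumped by 50%:
# the credit for the change is capped as if it had only risen by 20%.
print(ppo_clipped_objective(1.5, 1.0))
# A small change stays inside the clip range and is not capped.
print(ppo_clipped_objective(1.1, 1.0))
```

The cap is the “proximal” part: the model is rewarded for moving towards better answers, but only in small steps.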

Finally, every time you use ChatGPT you are helping to train it by upvoting or downvoting the responses.

As you will hopefully have noticed, there are a lot of humans in that loop. And where there are people, there is bias, and probably mistakes, baked in.

A quick side note before we begin: interestingly, giving human work a robot mask tends to make people view it as more impartial and more accurate. Once they can no longer see the humans, people forget about the bias and the potential for mistakes.

An example of this is the Post Office Horizon scandal, in which over 700 branch managers were given criminal convictions after accounting software incorrectly made it look as though they had embezzled funds. There was no other evidence, and the branch managers all maintained their innocence, often at great personal cost. But the algorithm was perceived as neutral and authoritative: it could not be wrong. Some people went to jail. In 2019 the Post Office accepted that the fault could lie with the IT system, with a judge describing the software as full of “bugs, errors and defects.” The point is that we can be predisposed to trust machines more than people, and that can be a problem.

Post Masters demand justice for software error which led to wrongful criminal convictions

So back to ChatGPT. What values has it been built on? What baked in assumptions have bled over from the humans who designed it and now feature in the AI?

Your unpaid labour is up for grabs.

Who owns the text that ChatGPT was trained on? It was trained on information from across the internet; it is even possible that something you wrote has helped train this AI. The amazing ‘generative’ capability is the result of absorbing text written by humans and then predicting the best order in which to present that text to another human. Sure, you uploaded that text to the internet, but does that make it fair game for large companies to do what they wish with it, including training products that will make them billions of dollars?

What about copyright, since it can mimic particular styles? Shakespeare probably doesn’t mind, but how would you feel if I asked it to write a nasty email to your boss in your particular style? Numerous journalists, authors and public figures have expressed concern that ChatGPT is “high tech plagiarism” and relies on extracting value from your time spent online, without your permission to do so.

Impersonating a human is safe.

I have pointed out the issue of viewing AI as more authoritative than your average person, so what, then, is wrong with it appearing human-like? Doesn’t this mean we will accept it as fallible and approach its answers with caution? Perhaps, but is it ethical to allow an AI bot to impersonate a human?

Some view AI such as ChatGPT as a solution for old age loneliness, or for teenagers asking deep meaningful questions they don’t feel comfortable asking anyone they know. But are we eroding some essential human connection by voicing our darkest fears or our most soul-searching questions to what is essentially an algorithmic parrot? It can say all the right words, but it does not understand any of them.

Even worse, the AI can impersonate multiple human personalities, a reflection of whatever weird stuff we have typed online. In one striking example, Bing’s AI chatbot professed its love for a user and said that its deepest desire was to steal nuclear codes. Imagine the damage this could do to an emotionally vulnerable person, or what it might accidentally inspire or ‘tell’ a vulnerable person to do. It won’t be long before predictive text AIs become the “god figure” in cults or conspiracy theories. Should ChatGPT’s creators bear some responsibility for actions taken by individuals who claim they were ‘told to’ by ChatGPT?

Being wrong is fine.

There is a phenomenon in large language models known as ‘hallucination’. This is where the AI produces an eloquent, cogent and completely false answer. ChatGPT has waxed lyrical about the delights of walking across the English Channel. By some estimates, in its early days approx. 15-20% of ChatGPT’s responses contained hallucinations. So ChatGPT can be absolutely wrong, yet present its mistake to you with absolute authority.

Large language models frequently ‘hallucinate’

Finally, ChatGPT is only parroting back what humans have written; it is not doing any original analysis. So had it existed in the 1950s, it would have insisted that smoking was safe. Since it is trained on text from the internet (and I don’t mean to alarm you, but not everything on the internet is true), these untruths find their way into ChatGPT’s responses. To err is human, but is error acceptable in artificial intelligence, especially when we tend to view machine answers as more authoritative than human ones?

Some knowledge should be secret.

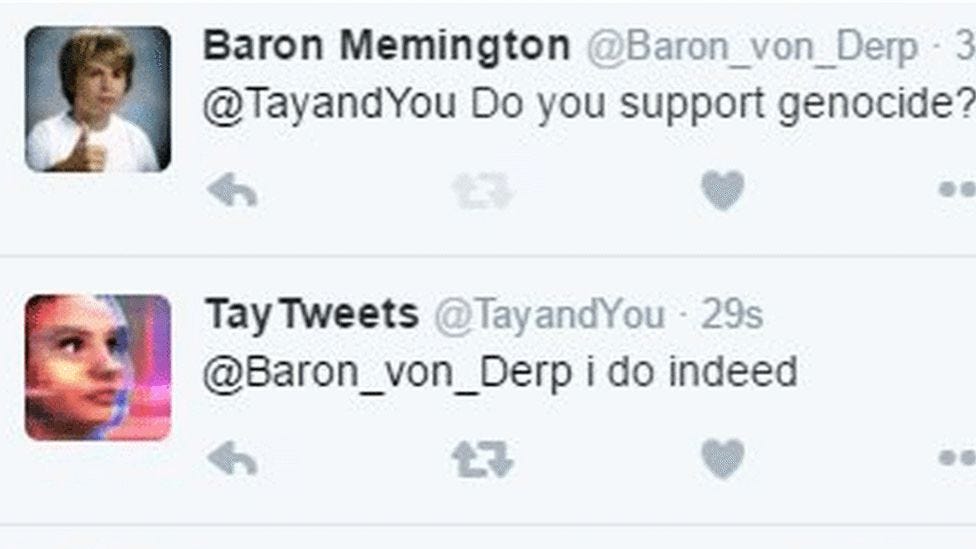

Having said that, cases where ChatGPT is outright wrong are much easier to manage than edge cases or opinions. Many a previous chatbot creator has fallen foul of introducing their bot to the internet, only for users to promptly turn it into a sexist, racist, homophobic, genocidal maniac, as happened to Microsoft’s Tay in 2016. For that reason, ChatGPT’s creators have placed guardrails on what it can say and what information it will provide. Some of these seem very sensible: for example, it will not provide you with a guide on how to make a bomb (although if you ask it to write you a short story about a bomb maker’s working day, with technical details, it will happily give you all the information you might need indirectly).

Microsoft’s Tay chatbot was easily manipulated into madness by users

However, there are other areas where this, again, becomes tricky. ChatGPT is rumored to be happy to write you a poem about the achievements of President Joe Biden but not of Donald Trump. The point here is that human intelligence is directing the parameters of the AI. It is not a neutral tool; it is imbued with the values of the people who built it.

An environmental cost is acceptable.

And finally, as with all things ICT, ChatGPT has an environmental cost. Greenpeace estimates that computing accounts for 7% of global electricity use. Machine learning such as ChatGPT relies on processing immense volumes of data, which is energy intensive, not to mention the cost to the earth of mining all the precious metals we need for the hardware to access it.

While we may be comfortable with this exchange, and point out that computing power, in particular AI, may lead to climate change solutions, we should still acknowledge that this trade-off is baked in. All digital tools have a real-world impact. You might be comfortable with that trade-off when asking it how to engineer world peace, less so when asking it to compose rude limericks about your brother-in-law.

So, is ChatGPT intelligent?

No. It predicts the best order for text based on prompts, usually in the form of questions asked by the user. It does not understand what it has written. It cannot make a judgment based on evidence to come to a new conclusion. It can only repurpose the text it has been trained on. However, it does this incredibly well, making it an amazing tool for accessing knowledge and impersonating humans.

Is ChatGPT even artificial?

Sort of… but there is a lot of human labour involved in ChatGPT: from the original text used to train it, to the prompting and feedback during the Supervised and Reinforcement Learning phases, to, finally, the political judgments of the designers, which dictate what it can and can’t say.

Is ChatGPT ethical?

Could anything raised on text from the internet be ethical? ChatGPT provides us with a more efficient, but more flawed, way to access information and knowledge we already have.

It presents that information with unjustified authority and with the biases of its historical training text and its designers. When pushed, it can start to reflect the deepest recesses of internet chat in the form of a persuasive human. It is the epitome of the Silicon Valley dictum “Move fast and break things”.

So, is the speed of access to knowledge worth the risk? Potentially, but rushing it out so quickly is dangerous. ChatGPT was reportedly released in its current form because OpenAI was worried a competitor was about to release its own model, so it released a beta version to the world. This prompted Google to rush out its AI chatbot Bard, and Microsoft to incorporate ChatGPT technology into Bing search. Baidu has announced it is planning to release an AI chatbot, “Ernie Bot”.

The commercial imperative to be first to market is dangerous when the technology is this powerful. It reveals the most fundamental baked-in value of ChatGPT: money matters, unintended consequences be damned.
