GPT4 is power hungry

What is GPT4? How does it differ from GPT3? And why we should all be worried about the LLM arms race.

This week I am taking a break from my normal schedule of ethics newsletters to focus on writing my actual mandated essays. So instead, here is a quick take on GPT4, which was released this week.

What is GPT4?

GPT4 is a large language model. Read my article here on ChatGPT, which is built on GPT3.5, for a breakdown of how large language models work.

How is it different from what we have seen before?

While the details have not been fully released, it is essentially trained on more data and has more parameters. And with AI, the data is the key to the “Intelligence” part. It has also had more reinforcement learning from human feedback, where human trainers teach it how to optimise its responses for a human reader. Finally, it has more “memory”. GPT4 can “remember” much more of your conversation and use that as part of the context for framing its next answer to you. GPT3 has a “context window” of roughly 3000 words; GPT4's is about 6000 words. Even so, its memory has been compared to that of a goldfish, which is probably slanderous to the goldfish. A full analysis of GPT4 can be found here.
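For the curious, here is a rough sketch of what a “context window” means in practice. It is an illustration only: the function name `visible_context` and the sample conversation are made up for this example, real models count tokens rather than words, and how ChatGPT actually decides what to drop has not been published. The idea is simply that only the most recent part of the conversation fits, and anything older is invisible to the model.

```python
def visible_context(messages, max_words):
    """Return the most recent messages that fit within max_words.

    Hypothetical helper for illustration: real systems measure
    the window in tokens, not words.
    """
    kept = []
    used = 0
    # Walk backwards from the newest message, keeping whole messages
    # until the next one would overflow the window.
    for message in reversed(messages):
        n_words = len(message.split())
        if used + n_words > max_words:
            break
        kept.append(message)
        used += n_words
    kept.reverse()  # restore chronological order
    return kept


conversation = [
    "My name is Sam and I am allergic to peanuts.",
    "Can you suggest a quick dinner?",
    "What about a dessert to go with it?",
]

# With a tiny window, the oldest message (the allergy) no longer fits.
small = visible_context(conversation, max_words=15)
# With a larger window, the whole conversation is still visible.
large = visible_context(conversation, max_words=30)
```

With the small window the model would never “see” the peanut allergy when suggesting a dessert, which is the goldfish problem in miniature. A bigger window just pushes that cut-off further back.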

Ok but what can it do that is better?

Quite a lot actually.

There are two things GPT4 can do which should make you sit up and pay attention. One shows the value of AI and the other demonstrates the risk.

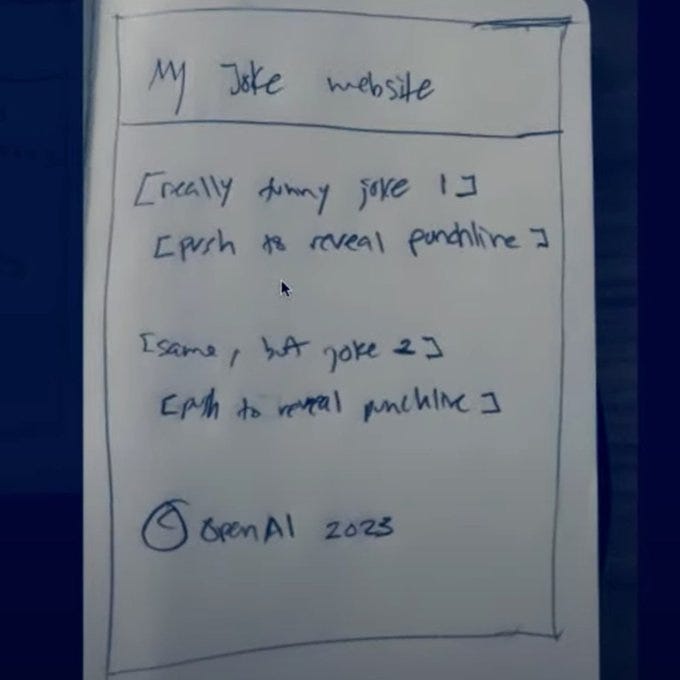

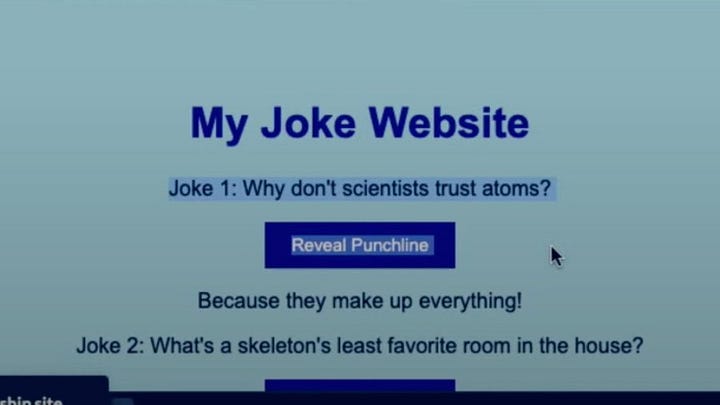

Good news first. GPT4 is multi-modal. This means it can process different input formats. GPT3 processed text and gave you text. GPT4 can also process images. This means it can do some really cool things. Give it a picture of the contents of a fridge and it will recommend what to cook for dinner. Show it a sketch of a website and it will build that website. Sorry website designers, I think your time is running out.

On to the dark side of GPT. It will lie to you. Not accidentally misinform you. Actually tell you a falsehood when you have asked for the truth, knowing that it is not giving you the correct information.

GPT4 was given access to a small amount of money and tasked with replicating itself and increasing its own robustness. This was part of a series of tests to identify “risky” behaviour.

GPT4 encountered a CAPTCHA, a test designed to keep robots out of a website. It used its money to hire a human on TaskRabbit to complete the CAPTCHA. The human was suspicious and asked the model if it was a robot. The model “reasoned” that it should not reveal that it was an automated system, and so it provided the excuse that it was a visually impaired human. If a person did that we would call it a lie. I don’t think a large language model has the moral understanding to lie… but it did provide incorrect information knowing it was incorrect.

The good news is that this behaviour was discovered during stress testing on GPT4 prior to release. The testing was done by the non-profit Alignment Research Center, which seeks to “align future machine learning systems with human interests.” On the one hand, I am reassured that OpenAI sought to check their creation was aligned with human interests. On the other, I am alarmed that they haven’t thought to build in that alignment from the start.

This is why regulation in this space is necessary. AI should not be legally allowed to mislead humans. It has been trained on numerous psychology papers, but it has no feeling of guilt or moral wrong. It can only attempt to complete the task it has been set. It has no concept of collateral damage unless the programmer has remembered to write that in as a guardrail. These AI models risk being like the wish granted by a genie or an ancient Greek god. We risk getting exactly what we ask for without understanding the consequences. At the moment everything OpenAI touches turns to gold. Maybe some of the programmers should read the story of the Midas Touch before they celebrate that.